Hello I'm

Mehul Goel

@root: Tinkerer. Developer. Innovator

☑ Created a fullstack REST API language server to support an internal file format used company-wide, delivering the project in under 5 weeks and enabling usage by over 200 employees.

☑ Implemented the Language Server Protocol (LSP) using asynchronous Python libraries to efficiently process and analyze files exceeding 100,000 lines, ensuring responsiveness and scalability.

☑ Led end-to-end development and deployment, shipping the project with 10 standalone features while actively collaborating with cross-functional stakeholders across engineering and product teams.

Built and shipped a fullstack REST API language server in under 5 weeks, handling 100,000+ line files using async Python, supporting 10 features, and now used by 200+ employees.

☑ Developed a computer vision implementation based on ResNet to segment the internals of 36 different iPhone models.

☑ Implemented the SORT algorithm for segmenting parts on a conveyor belt moving at 5 m/s in real time (> 60 FPS) with 96% IoU accuracy.

☑ Used YOLOv8 image classification to train a top-down model to detect screws from 3 different angles on 720p cameras across 36 models of iPhones, 12 models of iPads, and 4 models of Apple Watches. Led the creation of a demonstration video that was presented to Apple and to the leads of a 100+ member lab.

Developed a CV implementation to segment the internals of 36 different iPhone models into 10 classes, and created screw detection software for iPhones, iPads, and Apple Watches.

☑ Developed performance modeling software to assist in chip development with 97% accurate benchmarks on a variety of ML workloads (BERT, ResNet50, etc.).

☑ Improved hardware resource utilization over the prior software by more than 46% with a novel weighted round-robin load management solution.

☑ Created memory modeling software that modeled memory packets and their various paths throughout the chip to identify bottlenecks in current designs.

Developed performance modeling software to assist in chip development and created memory modeling software for 5+ ML workloads.
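
To illustrate the general idea behind weighted round-robin load management, here is a minimal sketch of the standard "smooth" weighted round-robin scheduler. It is not the novel variant used in the project, which is not described here; the unit names and weights are hypothetical.

```python
def smooth_weighted_round_robin(weights, picks):
    """Return `picks` scheduling decisions, spread in proportion to `weights`.

    `weights` maps a (hypothetical) hardware unit name to a positive weight.
    """
    if not weights or any(w <= 0 for w in weights.values()):
        raise ValueError("weights must be a non-empty mapping of positive numbers")

    total = sum(weights.values())
    current = {name: 0 for name in weights}
    order = []
    for _ in range(picks):
        # Every unit earns credit equal to its weight on each round.
        for name, weight in weights.items():
            current[name] += weight
        # The unit with the most accumulated credit is scheduled next...
        chosen = max(current, key=current.get)
        # ...and pays back the total weight, so heavy units are interleaved
        # with light ones instead of running back to back.
        current[chosen] -= total
        order.append(chosen)
    return order


# Hypothetical weights: unit_a should receive 5 of every 7 work items.
schedule = smooth_weighted_round_robin({"unit_a": 5, "unit_b": 1, "unit_c": 1}, 7)
print(schedule)
```

Over any window of 7 picks with these weights, each unit is chosen exactly as often as its weight, which is what keeps utilization balanced.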

☑ Published and presented this paper to over 30 audience members, passing a rigorous approval period with 2 separate rounds of peer review.

☑ Built universal modeling software for a variety of silicon to test against 10+ ML workloads, compatible with CPUs, GPUs, and even TPUs.

☑ Modeled memory and performance accuracy of Google TPU and other hardware within 5% of lab-tested measurements.

Published a peer-reviewed paper at AAAI discussing universal ML modeling software for a variety of silicon.

☑ Led a team of 10 in building out autonomous vehicle driving and passing, including position communication, implementing a Model Predictive Controller, and racing at 30 mph.

☑ Developing a LiDAR + stereo camera setup to perceive surrounding vehicles for autonomous zero-aid passing.

☑ Assisted in development of a new website to improve traffic, potential member interest, and promotion for sponsors.

☑ Manage security for 3 remote workstations and a variety of 3D printers that can be accessed by the 100+ members of the club.

Led a team of 10 in building out autonomous vehicle driving and passing, including onboard LiDAR-based object detection.

RoboBuggy is an autonomous vehicle developed over the course of 6 months, with students getting up at 5am for a brief window to test their vehicles in a real-world scenario. Across controls, path planning, and perception, I've worked on a variety of incredible projects.

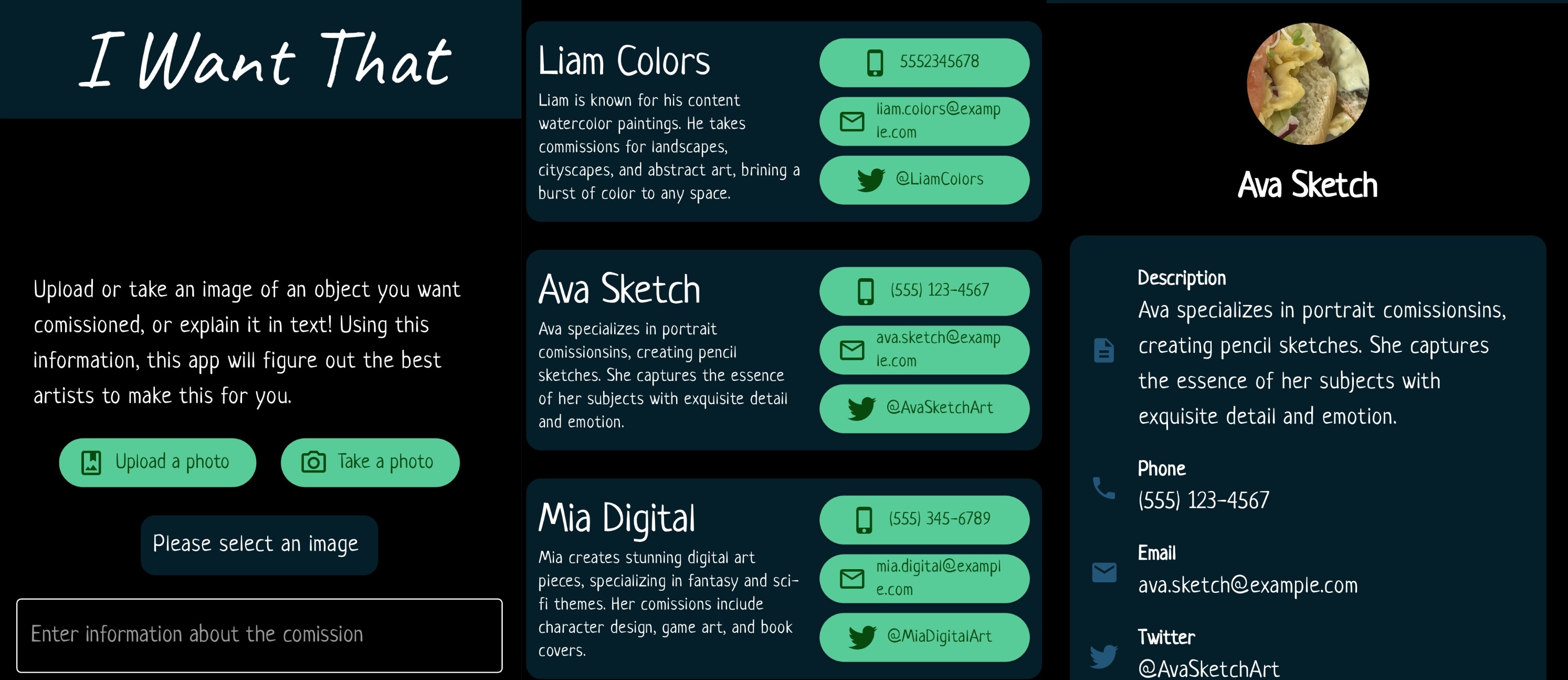

I Want That is a mobile application created to help users find the best artists to create the custom commissions they envision. The user provides a reference image for the commissioned piece, which is described by Google's Gemini AI. We then use that description to semantically search our existing database of artists and find the top 3 artists.
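
The semantic search step can be sketched as a top-3 ranking by cosine similarity between embeddings. This is only an illustration under assumptions: the app's actual embedding model and database are not described here, and the artist names, vectors, and function names below are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Cosine similarity between two equal-length vectors (0.0 if either is all zeros)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def top_artists(query_embedding, artist_embeddings, k=3):
    """Return the k (name, score) pairs most similar to the query, best first."""
    scored = [
        (name, cosine_similarity(query_embedding, embedding))
        for name, embedding in artist_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Hypothetical 2-D embeddings; real text embeddings have hundreds of dimensions.
artists = {
    "artist_a": [1.0, 0.0],
    "artist_b": [0.0, 1.0],
    "artist_c": [1.0, 1.0],
    "artist_d": [-1.0, 0.0],
}
description_embedding = [1.0, 0.0]  # stands in for the embedded image description
print(top_artists(description_embedding, artists))
```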

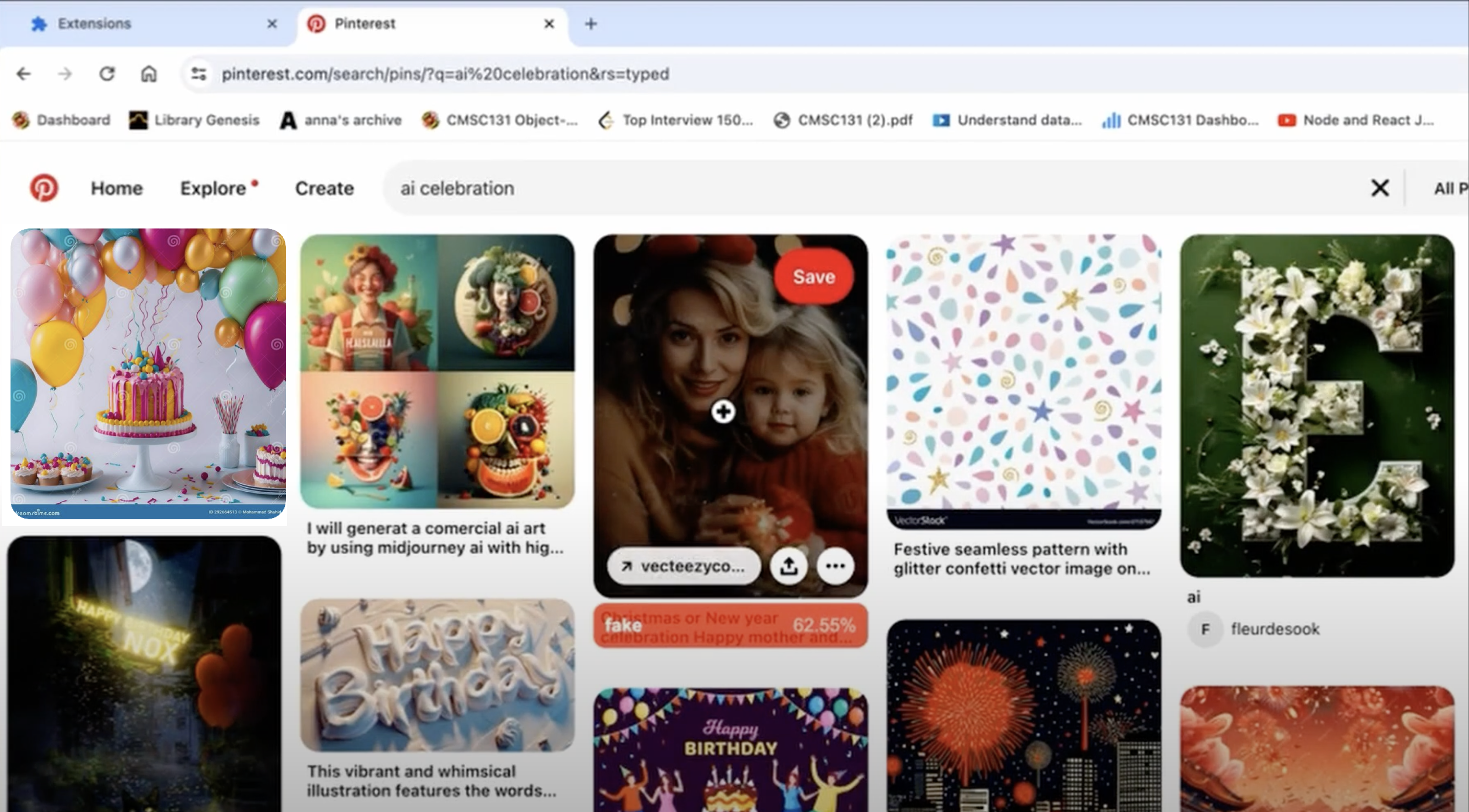

Audit-AI is a Chrome extension built around websites like Pinterest and Reddit that determines whether an image is AI-generated. This is done by training a YOLOv8 image classification model on a dataset; the model had an overall accuracy of 98% on the provided dataset and an estimated accuracy of 75% in real-world testing. The backend was written primarily in Python and deployed on a local web server, while the front end consisted of a ReactJS-based Chrome extension.

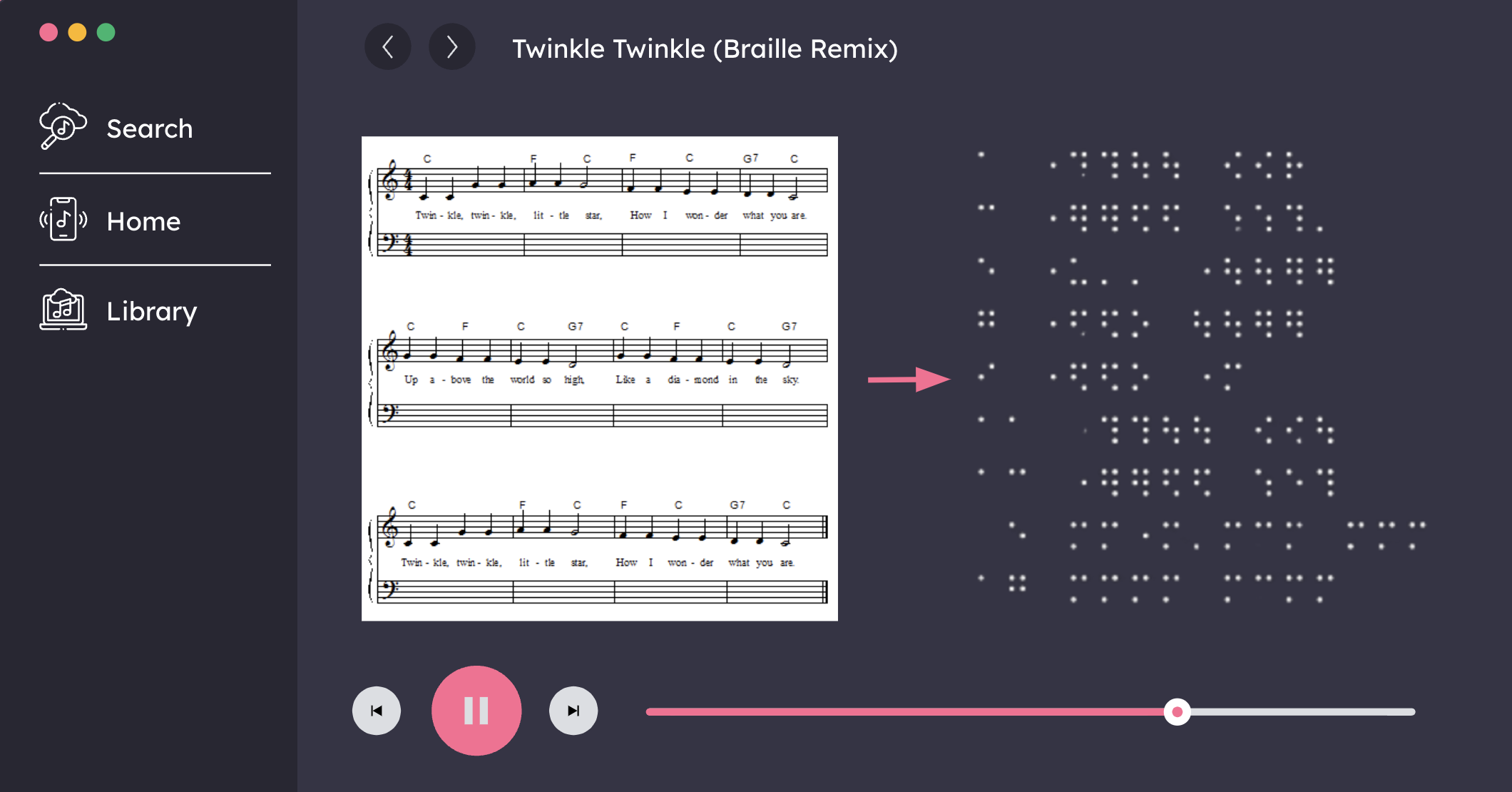

Braille Score is an application that translates sheet music for visually impaired musicians. It does this using a custom computer vision model called OEMER, which creates a braille format for the music. Afterwards, musicians have a variety of ways to understand the music, from braille sheets to audio, in whichever format they learn best through.

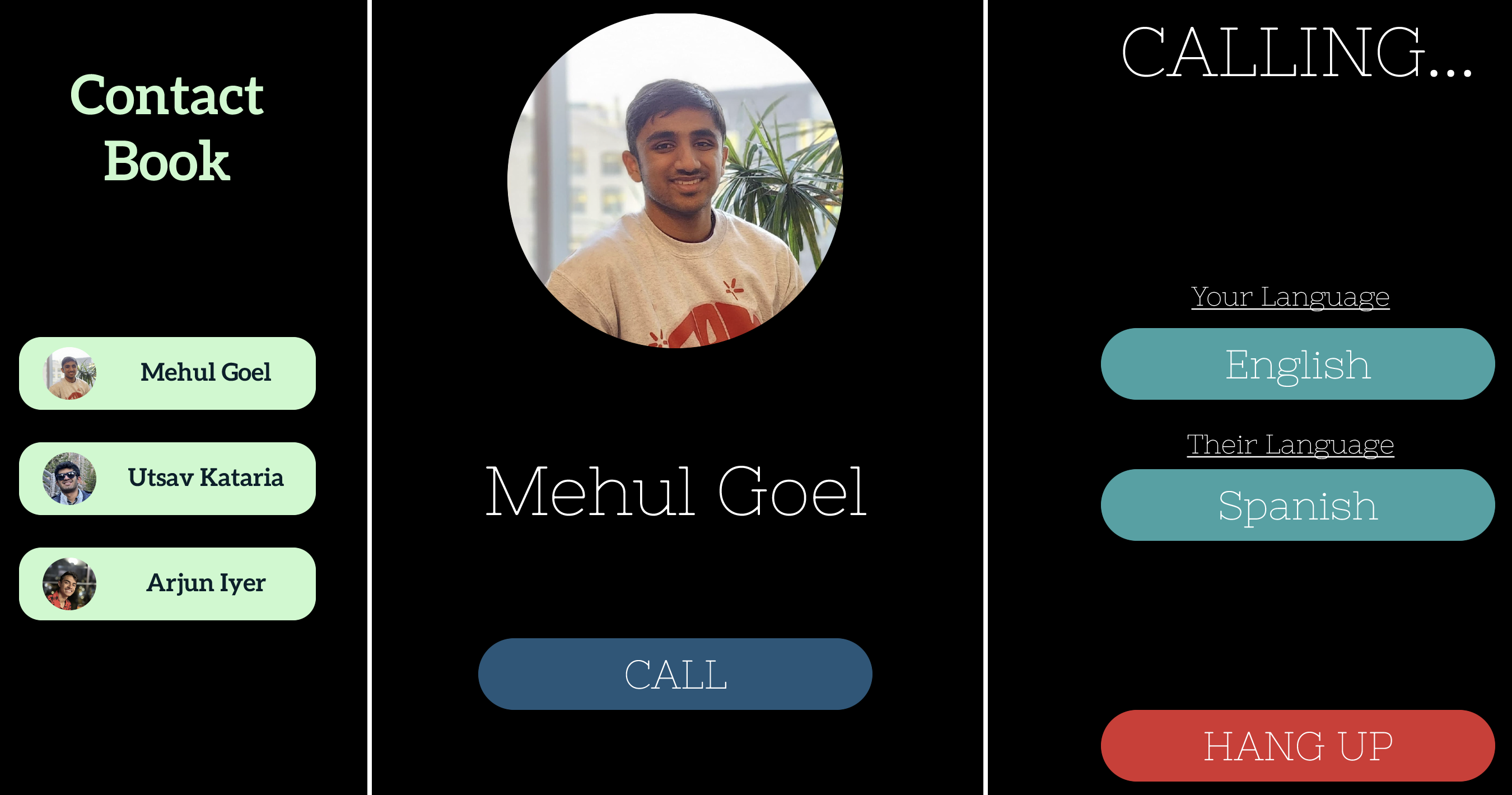

GlobaLex is an application that translates a call into a different language in real time. To create this application, we built a full web-calling application using Azure cloud services and ReactJS. The authentication is based upon MongoDB. After receiving an audio stream on the opposite end, we translate it in 30-second audio chunks using OpenAI's Whisper model.
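
The 30-second chunking step might look roughly like the sketch below. It assumes the audio is already available as a flat sequence of samples at a known sample rate; the function name is hypothetical, and the call into Whisper itself is left out because the project's actual integration is not described here.

```python
def chunk_audio(samples, sample_rate, chunk_seconds=30):
    """Yield consecutive slices of `samples`, each at most `chunk_seconds` long.

    The final chunk is shorter when the audio does not divide evenly.
    """
    if sample_rate <= 0 or chunk_seconds <= 0:
        raise ValueError("sample_rate and chunk_seconds must be positive")
    chunk_size = sample_rate * chunk_seconds
    for start in range(0, len(samples), chunk_size):
        yield samples[start:start + chunk_size]


# 70 seconds of silent placeholder audio at 16 kHz (an assumed sample rate).
audio = [0.0] * (16_000 * 70)
for index, chunk in enumerate(chunk_audio(audio, sample_rate=16_000)):
    # Each chunk would be passed to the translation model at this point.
    print(index, len(chunk) / 16_000, "seconds")
```

Fixed-size chunks keep latency bounded, at the cost of occasionally splitting a word across a chunk boundary.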

Eco Bin was a project that focused on improving the environment by auto-sorting waste into recycling, compost, and trash. It comprised 2 different sensors: a camera for computer vision and a depth camera to detect the shape of the object. Using this information along with a custom-built deep learning model, the robotic trash can accurately classified 80% of the tested objects, with 95% accuracy on the training dataset. This software was coupled with a hardware solution that would automatically ingest and sort the respective waste.